Oxford Robotics Institute | Projects - THING EU

THING EU

The subTerranean Haptic INvestiGator Project, or THING for short!

The subTerranean Haptic INvestiGator Project, or THING for short, is an EU H2020-funded project that brings together leading researchers to work on haptic navigation for walking robots. The project will focus on developing new terrain sensing, estimation and control technology for the ANYmal quadruped. In addition to ORI, partners will include ETH Zurich, Poznan University of Technology and the Universities of Edinburgh and Pisa, as well as non-academic partners such as ANYbotics, KGHM Cuprum (a major Polish mining company) and the City of Zurich.

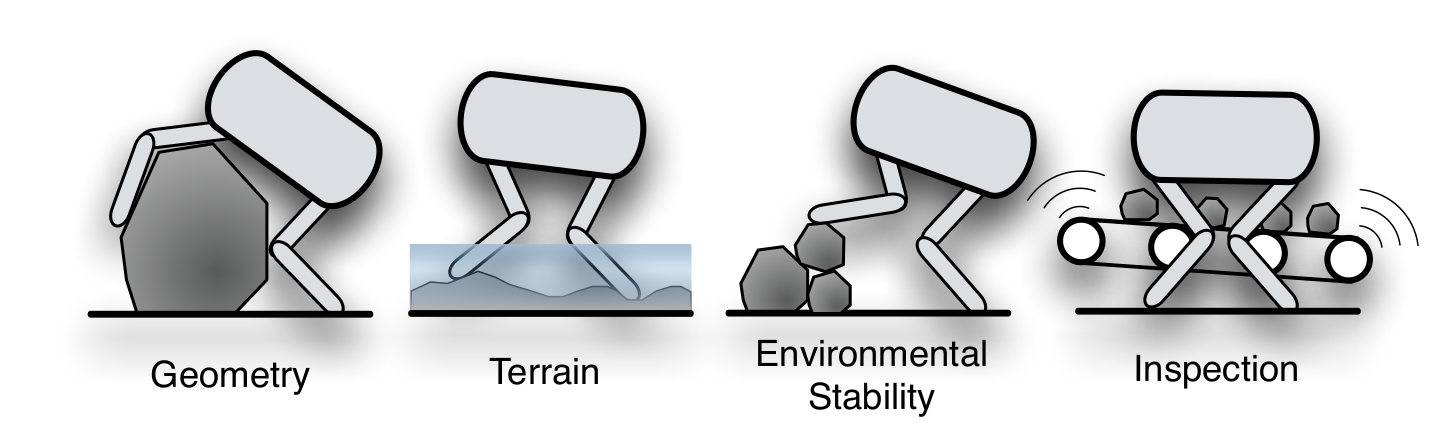

Compared with wheels, legs can provide superior mobility and agility. Scaling obstacles, leveraging the environment (e.g. for support or for pushing off), adapting to the condition of the terrain, and executing dynamic manoeuvres such as jumping or bounding are among the capabilities of legged locomotion. However, such advanced mobility requires advanced perception. To plan an aggressive manoeuvre, for example, the robot should know the geometry of the environment (i.e. where it can place its feet), but, critically, it also needs awareness of the environment's physical properties (e.g. the friction of a wet slope, the compliance of soft dirt, the stability of a rock formation) in order to decide where it ought to place its feet.

While legged robots have traditionally relied on vision, e.g. 3D cameras or LiDAR, to survey geometry via point clouds, this modality is often limiting. Sensors may be ill-positioned (i.e. far from the ground or feet), may have insufficient resolution, and may degrade in perceptually challenging settings with smoke, dust, poor lighting, or standing water. Fundamentally, vision is perception from a distance, and it is difficult to ascertain the physics of an environment without contact.

Thus, the aim of THING is to advance the perception abilities of legged robots through the haptic modality. The inspiration for this project is the ‘walking hand’ [1]: a robot capable of a high degree of mobility that can also exploit its legs and feet for haptic perception, sensing the shape, compliance, and friction of the environment as it interacts with it. Like the fingers of a hand, legs can probe, stroke, and feel the terrain precisely where it matters most. Unlike wheels or tracks, legs can apply controlled forces to the environment and sense their consequences. We believe that such perception through physical interaction is an essential step towards autonomy: it enables robots to develop a physical model of the world and to reason about the possible outcomes of their actions.

- ORI Principal Investigator: Maurice Fallon

- ORI Groups Involved: Dynamic Robot Systems

- Official Project Webpage: http://thing-h2020.eu/